Autograd.grad accumulates gradients on a sequence of Tensors, making it hard to calculate the Hessian matrix - autograd - PyTorch Forums

Autograd.grad accumulates gradients on sequence of Tensor making it hard to calculate Hessian matrix - autograd - PyTorch Forums

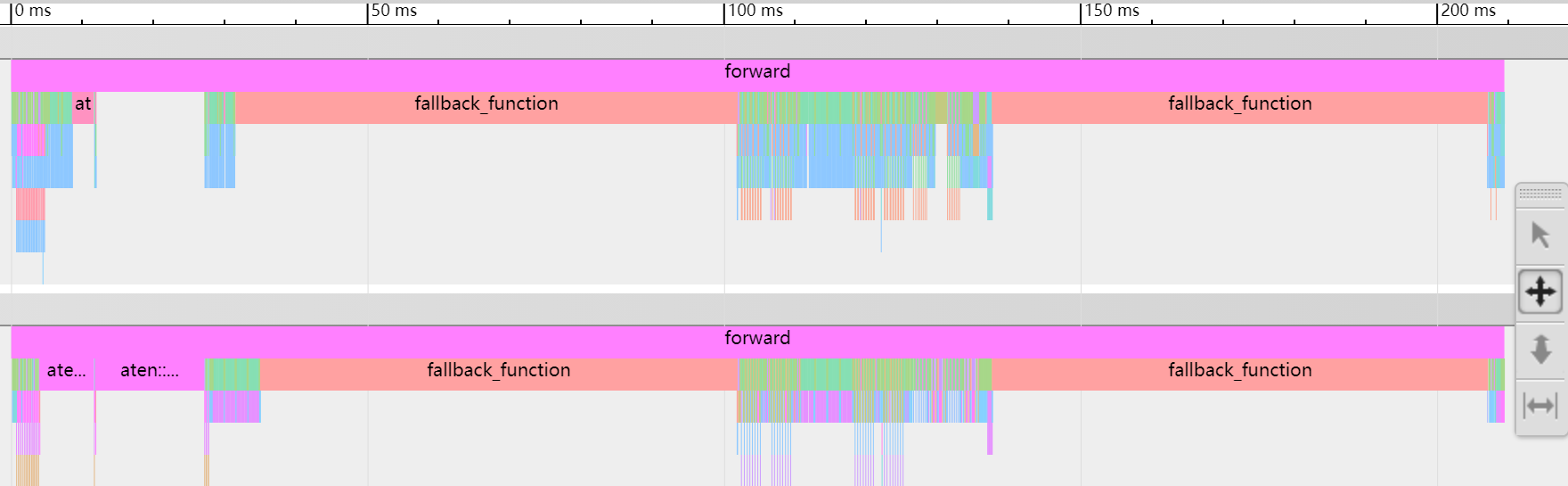

What does fallback_function actually mean when torch.autograd.profiler.profile is called? - autograd - PyTorch Forums

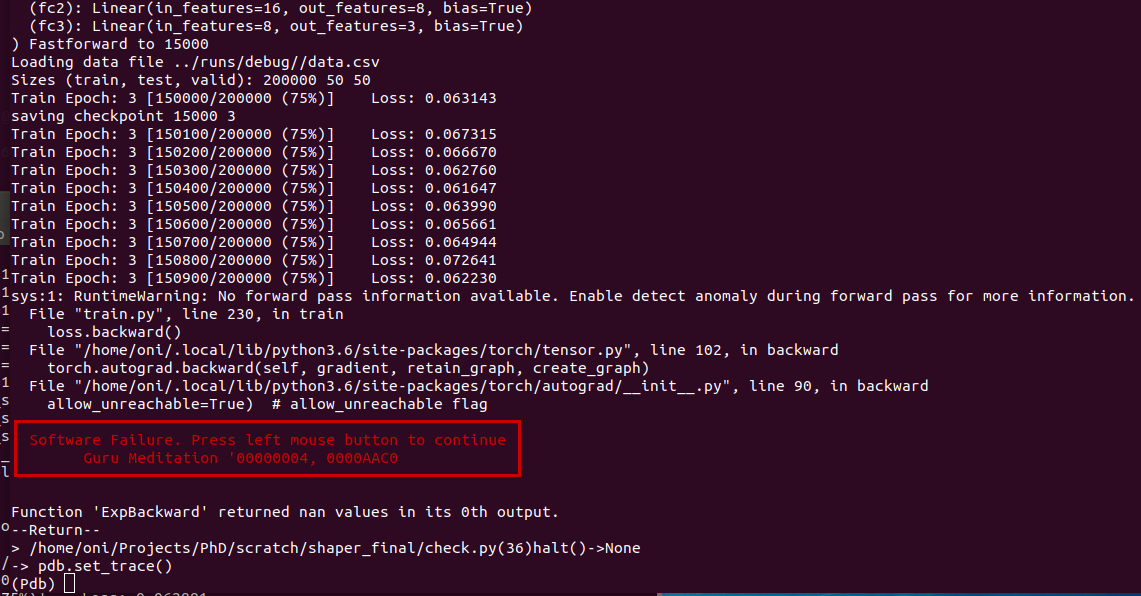

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.FloatTensor [1, 512, 4, 4]] is at version 3; expected version 2 instead - autograd - PyTorch Forums